Sharding: Throughput, Scalability and Why We Need Them

TL;DR

In distributed ledger technologies (DLTs), scalability is the capability of a system to increase its throughput as demand grows. Scalability is a major challenge for blockchains because their ledger design requires transactions to be totally ordered. In this blog post, we first explain the difference between scalability and throughput; then we explore sharding, in which messages are delivered to only a subset of network nodes, reducing redundancy to build a high-performance network.

Once upon a time, the two cities Tangle City and Las Iota were connected via a two-lane highway. It was the only route through which cars could move between the two cities. Over time, the cities grew, leading to lengthy traffic jams on the highway. One sunny day, the mayors of the two cities decided it was time to tackle the issue. Their first idea was: “Let’s build a bigger highway with four lanes instead of two!”. It sounded like a good idea, but the implementation proved to be difficult: a river, an old church, and a stubborn farmer living near the main road threw up several obstacles on the way to finalization. Nevertheless, the highway was finally completed. However, it did not solve the traffic problem: cars still had to pass through the same bottleneck and the two cities kept growing, which soon presented the two mayors with the same old problem. Should the mayors once again build bigger highways to ensure a strong connection between Tangle City and Las Iota? Or are there other solutions?

We have just described the concept of throughput and the related congestion due to increased demand in a non-scalable solution. Too often, these two concepts – throughput and scalability – are confused in the context of distributed ledger technology (DLT).

Throughput indicates how much information can be processed, validated and delivered in the network per unit of time, and it is measured in bytes per second. When messages vary in size, the commonly used metric transactions per second is only an approximate measure of throughput. In the example above, throughput is the equivalent of the number of highway lanes.
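To see why transactions per second only approximates throughput when message sizes vary, consider the following minimal sketch. The message sizes and the one-second windows are made-up values chosen for illustration, and the `throughput` helper is hypothetical.

```python
# Hypothetical message sizes (in bytes) processed in two one-second windows.
window_a = [200, 200, 200, 200]  # four small messages
window_b = [1600, 1600]          # two large messages


def throughput(sizes_bytes, seconds=1.0):
    """Return (bytes per second, transactions per second) for one window."""
    return sum(sizes_bytes) / seconds, len(sizes_bytes) / seconds


bps_a, tps_a = throughput(window_a)
bps_b, tps_b = throughput(window_b)

# Window B carries more data per second even though it has fewer transactions.
print(f"A: {bps_a:.0f} B/s at {tps_a:.0f} TPS")
print(f"B: {bps_b:.0f} B/s at {tps_b:.0f} TPS")
```

Under these assumed sizes, the window with the lower transaction count moves four times as many bytes, which is why bytes per second is the more faithful measure.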

Scalability, on the other hand, is the capability of the network to deal with a growing amount of work by adding resources to the system, e.g., by increasing the number of nodes. The two mayors improved throughput by enhancing the existing road infrastructure, but growing demand soon used up the added capacity. Other alternatives should therefore have been considered to improve the lives of citizens: for instance, building additional highways, replacing cars with more efficient public transport (e.g., buses or trains), or building new cities to distribute citizens without creating bottlenecks in big metropolises. In this blog post, we will clarify the definition of scalability and explain how it affects DLTs.

The term "scalability" is applicable to a wide range of contexts and domains, such as routing protocols, distributed systems, networks, databases and business models. In the context of DLT, we are interested in load scalability, which is the ability of a distributed system to expand (and contract) to accommodate heavier (or lighter) loads. For instance, Bitcoin cannot be defined as a scalable DLT: this is by design because security has been preferred over performance. In fact, independently of the number of messages awaiting confirmation and the number of nodes in the network, the total throughput and the finality time remain unchanged. Some recent solutions have tried to overcome this issue by, for instance, adding a Lightning Network on Layer 2 in order to create off-chain payment channels to reduce the burden on the main chain.

While outsourcing transactions to another layer easily increases the overall throughput, the scalability challenge of the main chain is still not addressed: opening and closing Lightning channels still require one transaction each on the base layer. So, even if the base layer is only used to open and close Lightning channels and all other transactions occur in the Lightning channels, scalability would still be limited to the number of Lightning channels that can be opened and closed, which, in the Bitcoin network, currently amounts to five to seven per second. Bitcoin has basically added a second layer of high-speed highway lanes above the actual highway, but people still need to take the same onramps to even get onto the highway before they can use the high-speed lanes.

Other solutions do not outsource throughput to additional layers but instead boast that the main chain can support several hundred thousand transactions per second. That, however, comes with a different challenge: if a single transaction has a size of just 1024 bytes, a mere 250,000 of those transactions per second would require any two nodes to be connected through a high-speed data connection of more than 2 Gbps ¹. This would heavily limit participation in a decentralized network to those who can afford expensive infrastructure. This solution could therefore be pictured as a superhighway with hundreds of lanes, but one that is difficult and extremely expensive to build, with offramps under the control of a select few wealthy people.
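The bandwidth figure above can be checked with a few lines of arithmetic. The sketch below uses the transaction size and rate quoted in the text; the function name is hypothetical, and, as in footnote ¹, networking overhead and multiple peer connections are ignored.

```python
TX_SIZE_BYTES = 1024     # transaction size quoted in the text
TX_PER_SECOND = 250_000  # transaction rate quoted in the text


def required_bandwidth_gbps(tx_size_bytes, tx_per_second):
    """Raw link bandwidth in Gbps, ignoring protocol overhead."""
    bits_per_second = tx_size_bytes * 8 * tx_per_second
    return bits_per_second / 1e9


gbps = required_bandwidth_gbps(TX_SIZE_BYTES, TX_PER_SECOND)
print(f"{gbps:.3f} Gbps")  # 2.048 Gbps
```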

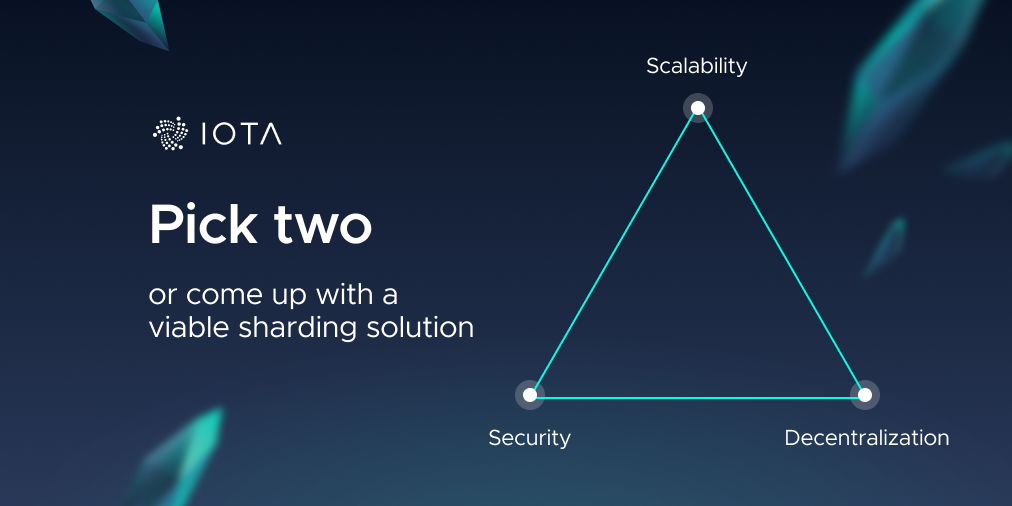

To achieve scalability, the blockchain trilemma, which states that one can only have two of the three properties of decentralization, security, and scalability, must be overcome. Bitcoin chooses security and decentralization but falls short on scalability, while other competitors choose security and scalability but fall short on decentralization. So how can one retain all three characteristics?

The most popular approach to scalability in DLTs is a concept called sharding: splitting up the total amount of transactions into smaller partitions. The concept of a "shard" was introduced in 1988 by the technical report “Overview of SHARD: a System for Highly Available Replicated Data” ², which aimed to use redundant hardware to facilitate data replication. Sharding in DLT usually refers to horizontal partitioning, where the rows of a database are split into partitions that are held separately by different servers. Currently, sharding solutions are being developed by several blockchain projects including Ethereum, Zilliqa, and Polkadot.
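Horizontal partitioning can be sketched in a few lines. The example below assigns each message to a shard by hashing its identifier; this is a generic illustration and not the scheme used by Ethereum, Zilliqa, Polkadot, or IOTA. The shard count, the message identifiers, and the function names are all assumptions made for the sketch.

```python
import hashlib

NUM_SHARDS = 4  # hypothetical shard count


def shard_of(message_id: str, num_shards: int = NUM_SHARDS) -> int:
    """Map a message identifier to a shard index via its SHA-256 hash."""
    digest = hashlib.sha256(message_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def partition(message_ids, num_shards: int = NUM_SHARDS):
    """Group message identifiers by the shard responsible for them."""
    shards = {i: [] for i in range(num_shards)}
    for mid in message_ids:
        shards[shard_of(mid, num_shards)].append(mid)
    return shards


messages = [f"tx-{i}" for i in range(1000)]  # made-up identifiers
shards = partition(messages)
for index, members in shards.items():
    print(f"shard {index}: {len(members)} messages")
```

Each message lands in exactly one shard, so a node following a single shard only has to handle that shard's portion of the 1000 messages.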

Sharding in DLTs allows nodes to validate and store only a subset of the messages exchanged in the network. Because the redundancy is reduced, the number of messages that the network can handle increases compared to a non-sharded solution. However, with sharding, an attacker can more easily gain a larger influence within a single shard, as the security of the shard is directly proportional to its cumulative hash-rate (in Proof of Work systems) or to its total stake (in Proof of Stake and similar systems). In essence, by splitting up the transactions into chunks, there is a risk of also splitting up the security features and thus reducing the security of each individual shard, unless some mitigation mechanism is introduced.
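The trade-off described above can be made concrete with an idealized back-of-the-envelope model. The sketch assumes that hash-rate or stake is split evenly across shards, that an attacker can concentrate all of its resources on one shard, that a majority share is enough to control a shard, and that there is no cross-shard overhead. None of these assumptions comes from a specific protocol, and both functions are hypothetical.

```python
def shard_takeover_fraction(num_shards, majority=0.5):
    """Share of the whole network's resources that amounts to a
    majority inside one shard, under an even split across shards."""
    return majority / num_shards


def network_capacity(per_shard_capacity, num_shards):
    """Total capacity when each shard processes a disjoint subset of
    messages (idealized: no cross-shard communication cost)."""
    return per_shard_capacity * num_shards


for k in (1, 4, 16):
    share = shard_takeover_fraction(k)
    capacity = network_capacity(1000, k)  # 1000 messages/s per shard is made up
    print(f"{k:>2} shards: capacity {capacity} msg/s, takeover share {share:.2%}")
```

In this simplified model capacity grows linearly with the number of shards while the resources needed to dominate one shard shrink at the same rate, which is why mitigation mechanisms are needed.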

The directed acyclic graph (DAG) structure of the IOTA ledger provides an excellent starting point to build a scalable DLT. A DAG-based ledger does not introduce the conceptual bottleneck present in the blockchain, where all messages are subject to total ordering, which leads to unavoidable friction in the protocol. In IOTA, there is no central leader that defines the creation of the next block of transactions. Because the protocol is leaderless, nodes can have different perceptions of the current ledger state while still agreeing on which transactions are valid and which are not. As a result, in IOTA more messages mean faster finality!

However, as discussed above, resources are also limited in IOTA, so a solution to the load scalability challenge is still required. The IOTA protocol is lightweight and does not require fees, which provides a fundamental advantage compared to similar technologies. The research department of the IOTA Foundation is studying solutions that exploit this potential in line with the IOTA vision. We look forward to sharing our findings soon.

¹ This calculation ignores the always-present networking overhead and the fact that nodes in a peer-to-peer network are usually connected to multiple nodes. In practice, such competitor networks would have to rely on nodes being able to afford at least 10 Gbps connections.

² Currently, no copies of the report are easily available. Another interpretation is that the name “shard” comes from the video game Ultima Online.
